No, Driverless Cars Aren't Far Safer Than Human Drivers

Self-driving cars have claimed their first pedestrian fatality, a woman in Tempe, Arizona, who was struck and killed by an Uber vehicle travelling in autonomous mode. Weren't self-driving cars supposed to be safer than those piloted by fallible humans?

And who says they aren't? As many on social media rushed to point out, more than 37,000 people were killed by human-piloted vehicles in 2016. Compared with that, one pedestrian fatality, however sad, looks pretty good.

This argument is appealing. Unfortunately, it's wrong.

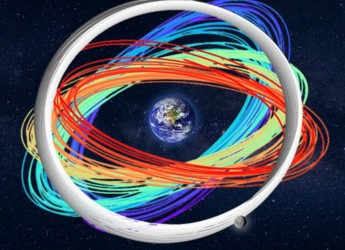

Motor vehicle fatalities are measured in terms of "vehicle miles travelled," which is just what it sounds like. In 2016, there were 1.18 fatalities for every 100 million miles that Americans drove. Since Americans drove nearly 3.2 trillion miles that year, that still added up to tens of thousands of deaths.
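The arithmetic behind those figures is easy to check. Here is a minimal sketch in Python that uses only the two rounded numbers quoted above; the variable names are illustrative.

```python
# Figures quoted in the article, both rounded.
FATALITIES_PER_100M_MILES = 1.18
MILES_DRIVEN_2016 = 3.2e12  # "nearly 3.2 trillion", so a slight overestimate

# Rate per 100 million miles times the number of 100-million-mile blocks driven.
expected_deaths = FATALITIES_PER_100M_MILES * MILES_DRIVEN_2016 / 100e6
print(f"Implied deaths in 2016: about {expected_deaths:,.0f}")
```

Because both inputs are rounded, the result is only approximate, but it lands in line with the "more than 37,000" figure cited earlier.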

To know whether self-driving cars are safer than the traditional kind, you'd have to know how many miles they travelled before incurring this first fatality. And the answer is "fewer than 100 million" - a lot fewer. Waymo, the industry leader, recently reported logging its 4 millionth mile of road travel, with much of that in Western states that offer unusually favourable driving conditions. Uber just reached 2 million miles with its autonomous program. Other companies are working on fully autonomous systems, but adding them all together couldn't get us anywhere close to 100 million. (The numbers go up if you add Tesla's autopilot, but that system has more limited capabilities, and fatality statistics don't necessarily get any clearer - or more favourable - if you do.)

One fatality at these numbers of road-miles driven does not suggest, to put it mildly, a safety improvement over humans. It's more like a dramatic step backward, or if you like, a high-speed reverse.
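A rough version of that comparison can be sketched in Python. It assumes, purely for illustration, that the relevant mileage is just the roughly 4 million Waymo miles plus the 2 million Uber miles cited above. Other companies' miles are left out, so the true denominator is somewhat larger, and a rate built on a single event is a very noisy point estimate.

```python
HUMAN_RATE_PER_100M = 1.18  # 2016 US figure quoted in the article

# Assumption: count only the two mileage totals the article cites.
waymo_miles = 4e6
uber_miles = 2e6
autonomous_miles = waymo_miles + uber_miles
fatalities = 1

# Scale one fatality over the assumed mileage up to a per-100-million-mile rate.
naive_rate_per_100m = fatalities / autonomous_miles * 100e6
ratio = naive_rate_per_100m / HUMAN_RATE_PER_100M

print(f"Naive autonomous rate: {naive_rate_per_100m:.1f} per 100 million miles")
print(f"Roughly {ratio:.0f} times the human rate")
```

On these assumptions the naive rate comes out well above one per 100 million miles, which is the sense in which the raw numbers look like a step backward.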

Which is not to say that we should pull the plug on autonomous driving. For one thing, regular old-fashioned cars were none too safe when they first arrived on American roads. In 1921, the first year for which such data is available, there were 24 deaths for every 100 million vehicle miles travelled. Over time, improved cars, drivers and roads reduced that figure by nearly 95 percent. Presumably, self-driving cars can also improve.

Given their other benefits, it's worth working for those improvements, regardless of whether these cars are currently less safe than human drivers. Think of all the elderly forced to leave their homes when they can no longer drive, the people whose disabilities make it hard or impossible for them to operate a car, the barroom drunks who get behind the wheel because there's no easy way to get home. Autonomous vehicles promise to solve these major problems; we should pursue that promise, even at the temporary cost of some road safety now.

Especially since it's not clear that there is actually a cost in road safety. The glib insistence that self-driving cars are safer than human drivers is not well-founded - but neither is a counterreaction that insists that they're obviously much more dangerous.

Even if you could design a system that you knew would have one fatal accident every hundred million miles on average (roughly the same as a human driver), that wouldn't mean that the car would strike and kill someone just as the odometer hit 100 million. The accident might occur on mile 5 million, or mile 99 million - or heck, you might get lucky and not have a fatality until mile 115 million. It's not possible to confidently assess the risk of an accident based on a single event like this. Statistical regularities that are apparent in large data pools get lost in the noise when the sample is too small.
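One way to make this concrete is to treat fatal accidents as a Poisson process, meaning they occur independently at a constant average rate per mile. That is a modelling assumption, not something the article states. Under it, the chance of at least one fatality within a given number of miles is 1 - exp(-expected fatalities). The function name and the mileage values below are illustrative.

```python
import math

def prob_at_least_one(miles, rate_per_100m):
    """P(at least one fatality in `miles`) under a Poisson model."""
    expected = rate_per_100m * miles / 100e6
    return 1.0 - math.exp(-expected)

# Hypothetical system that averages exactly one fatality per 100 million miles.
for miles in (5e6, 6e6, 99e6, 115e6):
    p = prob_at_least_one(miles, rate_per_100m=1.0)
    print(f"{miles / 1e6:>5.0f} million miles: {p:.1%} chance of at least one fatality")
```

Under this model, a system exactly as safe as the hypothetical one would still have roughly a 6 percent chance of a fatality within its first 6 million miles, and about a one-in-three chance of reaching 115 million miles with none. A single early fatality is therefore weak evidence in either direction.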

We won't know how dangerous self-driving cars are compared with human drivers until they've driven billions more miles. At the moment, we just know that they can kill people, not how often they will. And that's a possibility that advocates for self-driving cars should have prepared the public for better than they have.

Enthusiasts for autonomous vehicles have been a little too quick to respond to safety concerns by pointing out how many accidents human drivers have - or by noting that self-driving cars don't text, or drive drunk, or fall asleep at the wheel. All true, but as they drive more and more miles, we may discover that they have problems humans don't.

Uber has already pulled its autonomous cars off the road in response to this tragedy. If it hadn't, it seems likely that the public pressure to do so would have been deafening. The company - and advocates of autonomous vehicles more generally - will need to put in a lot of work over the next few months restoring public faith in this technology.

Luckily, software systems can be re-engineered; even if self-driving cars aren't currently safer than a human driver, there's good reason to expect that one day they will be. But we've done that future no service by talking as if it had definitely already arrived. If we tell people that self-driving cars are perfectly safe, and that turns out not to be true, the backlash could drive these vehicles off the road before they're able to deliver on their promise.

© The Washington Post 2018
