OPINION

Cognitive Computing Isn't Just a Buzzword, It's the Next Big Thing in Technology


Every so often, technology reaches a point where simply making things faster no longer seems to make much difference. This is especially true of unglamorous components such as processors. Manufacturers can keep making them faster and cheaper, but it becomes harder and harder to sell customers new PCs or phones on the basis of speed alone.

The high-end market has already moved away from advertising clock speeds. Big screens, software, cameras and high-speed data pipes are what sell upgrades now. Brand image and the intangible "experience" of owning a particular device are promoted far more than its raw capabilities; the fact that every top-tier phone now has to have a metal body is the perfect example of this.

When this happens, a new "killer app" is usually needed to create fresh demand. Sometimes, though, the hardware, software and infrastructure required to turn a vision into reality aren't ready when they're needed. Other ideas fail for different reasons: some aren't accepted for socio-cultural reasons (such as Google Glass), some just don't catch on (wireless charging should have been a huge deal years ago) and some are solutions to problems that don't exist (does anyone remember digital photo frames?).

One emerging technology that promises to be a massive game-changer, both for the tech industry and for society as a whole, is cognitive computing. Already one of today's darling buzzwords, cognitive computing is the vehicle through which companies promise to usher in a whole new era of personal technology.

The term (conceptually related to "artificial intelligence", "deep learning", "neural network" and "machine learning", amongst others) refers to a computer's ability not only to process information in pre-programmed ways, but also to seek out new information, craft its own instructions for interpreting it, and then take whatever action it deems fit. By constantly ingesting new information, no matter where it comes from or what format it comes in, the computer is able to "think" about problems and come up with plausible solutions, something the rigidly systematic computers we know and love (or hate) today simply cannot do.


The end goal is to let technology adapt to its user, making context-appropriate decisions based on time, place and other factors. Our new-age digital assistants would proactively seek out information, understand how to interpret it in context, and then take appropriate action. Most importantly, such systems would learn from their users' habits and patterns, offering helpful information before it is asked for. Wikipedia calls this "machine-aided serendipity", which gets the point across nicely.

What could devices with cognitive capabilities do? For starters, they could recognise users based on mannerisms, posture, grip and other subtle parameters, improving security and reducing the risks of theft and loss. They could detect malware earlier, based on signs of unusual behaviour. Natural language input could become far more fluid than is possible today. Healthcare apps could allow diagnoses and treatments to be completely personalised and integrated into a person's routine. Autonomous cars could learn when and how to account for human error, preventing collisions and initiating lifesaving measures if needed. Entire smart cities could organise resources around people's movements and consumption patterns.


The "neural network" aspect refers to a device's ability to connect the dots: linking the information it has been programmed with to the information it has decided, on its own, is important. Much like synapses in the brain, new pathways need to form between seemingly arbitrary, disjointed bits of data. When a device realises it doesn't have enough information on which to base a decision, it should be able to acquire more on its own. Patterns can also be compared with those of other users to build a massive common intelligence, loosely mirroring the way human brains work.

The possibilities are endless: AI that's more Star Trek than Siri is only the beginning. Significant progress has already been made, and the industry now seems ready to take a massive leap. Qualcomm showed off a handful of demos based on its upcoming Zeroth platform at this year's Mobile World Congress. They were running on existing hardware, but the company made the tantalising promise that its next flagship processor, the Snapdragon 820, expected by the end of this year, would be designed with cognitive computing as a primary application.


One demo involved photo optimisation: a test device was able to recognise multiple characteristics of a scene, such as how much sky was visible, whether there were people or inanimate objects in the foreground, what kind of lighting was affecting the composition, and much more. In some cases it got as specific as "watch on wrist" for a closeup, and in others it not only detected people in a scene but also recognised who they were and tagged them automatically. The camera app could compare what it was seeing with things it already knew about, allowing it to make judgement calls and adjust settings on the fly to get each shot just right. In another demo, a test device was able to recognise handwriting and turn written notes into text, also in real time.

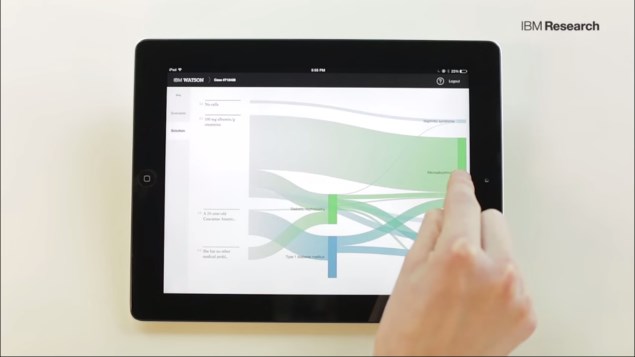

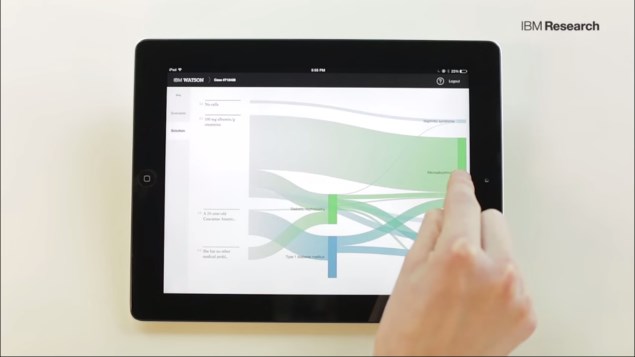

Qualcomm is far from the only company investing in cognitive computing platforms. IBM's Watson might be one of the most famous, and has moved on from game shows to medical research. Intel has been showcasing its RealSense tools and its vision of "perceptual computing" for a long time now, and Nvidia is positioning its upcoming Pascal architecture as ideal for the computational requirements of deep learning. If you recently tried Microsoft's viral how-old.net age-guessing tool, you've already had a brush with machine learning algorithms: what might seem amusing to casual users is actually a groundbreaking tech demo that shows off the kind of power developers can now tap into.

Today's single-purpose demos give us an outline of a possible future in which such technology becomes inextricably woven into our lives. We can only imagine what new capabilities might emerge from all our devices talking to each other and constantly learning more about the world around them. AI, in whatever form it takes, will augment human capabilities, offering new support structures for problem solving and decision making.

Once again, science fiction is pointing the way forward. Cognitive computing is real, and it is about to go mainstream. As with all technology, it's what we make of it that will define our future.

Disclosure: Qualcomm sponsored the correspondent's flights and hotel for MWC 2015 in Barcelona.

For the latest tech news and reviews, follow Gadgets 360 on X, Facebook, WhatsApp, Threads and Google News. For the latest videos on gadgets and tech, subscribe to our YouTube channel. If you want to know everything about top influencers, follow our in-house Who'sThat360 on Instagram and YouTube.

Further reading:

Cognitive computing, Artificial intelligence, Deep learning, Facebook, IBM, Intel, Intel RealSense, Machine learning, Microsoft, Neural networks, Nvidia, Nvidia Pascal, Qualcomm, Snapdragon 820, Zeroth
