- Home

- Science

- Science News

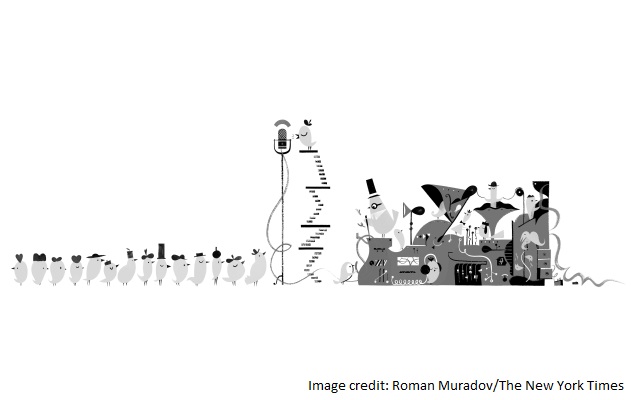

- Why Computers Won't Replace You Just Yet

Why Computers Won't Replace You Just Yet

- "40% of smartphone users connect to Internet immediately upon awakening, before leaving bed. #TheFuture http://bit.ly/WYRz39 @TheAtlantic"

- "Cybercrime market now greater than annual global market for marijuana, cocaine, and heroin #TheFuture http://bit.ly/WYRz39 @TheAtlantic"

Can you guess which one was retweeted more often?

Three computer scientists, Chenhao Tan, Lillian Lee and Bo Pang, have built an algorithm that makes this kind of guess, as described in a recent paper, and the results are impressive. (The answer: the first one got more retweets.)

That an algorithm can make these kinds of predictions shows the power of "big data." It also illustrates a fundamental limitation of big data: guessing which tweet gets retweeted is much easier than writing one that does.

To see why, it helps to look at how the algorithm was built. It used a data set of around 11,000 pairs of tweets - each pair being two tweets about the same link sent by the same person - to learn which word patterns looked predictive, and then tested whether those patterns held up on new data. This is usually how "smart" algorithms are created from big data: large data sets with known correct answers serve as a training bed, and new data then serves as a test bed - not too different from how we might learn what our co-workers find funny.

The end result is an algorithm that guesses well. Given a pair, it picks the tweet that got more retweets about 67 percent of the time, beating humans, who on average get it right only 61 percent of the time.

This is striking when you think of the enormous handicap the algorithm has. Yes, it could learn from 11,000 pairs of tweets. But it has no other knowledge. It has none of the wealth of contextual information you have accumulated over the years. It has never heard friends complain about spouses checking their phones first thing in the morning. It does not have a sense of humor or know what a pun is. It does not know what makes a turn of phrase elegant or awkward.

It must rely on a few crude features, such as length of the tweet, the presence of certain words ("retweet" or "please") or the use of indefinite articles. Yet with so little, it does so much. This is one of the miracles of big data: Algorithms find information in unexpected places, uncovering "signal" in places we thought contained only "noise."
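The recipe described above (pairs of tweets, a handful of crude features, a training bed and a held-out test bed) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the researchers' model: the exact feature definitions, the choice of logistic regression on feature differences, the 140-character scaling and every name here (`features`, `diff`, `train`, `predict`, `accuracy`, `train_test_split`) are hypothetical.

```python
import math
import random

def features(tweet: str) -> list[float]:
    """Hypothetical crude features: length, a 'retweet'/'please' flag,
    and the share of words that are indefinite articles."""
    words = [w.strip('.,!?:;"\'') for w in tweet.lower().split()]
    n_words = max(len(words), 1)  # avoid division by zero on empty tweets
    return [
        len(tweet) / 140.0,  # length, scaled by the classic 140-character limit
        1.0 if ("retweet" in words or "please" in words) else 0.0,
        sum(w in ("a", "an") for w in words) / n_words,
    ]

def diff(a: str, b: str) -> list[float]:
    """Feature difference between the two tweets of a pair."""
    return [fa - fb for fa, fb in zip(features(a), features(b))]

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train(pairs, epochs: int = 200, lr: float = 0.5) -> list[float]:
    """Logistic regression on feature differences, fit by stochastic gradient
    descent. Each pair is (tweet_a, tweet_b, y), y = 1 if tweet_a got more retweets."""
    w = [0.0] * len(features(""))
    for _ in range(epochs):
        for a, b, y in pairs:
            x = diff(a, b)
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
            # Gradient step on the log loss: nudge w toward the observed winner.
            w = [wi + lr * (y - p) * xi for wi, xi in zip(w, x)]
    return w

def predict(w, a: str, b: str) -> bool:
    """True if the model expects tweet a to be retweeted more than tweet b."""
    return sum(wi * xi for wi, xi in zip(w, diff(a, b))) > 0

def accuracy(w, pairs) -> float:
    """Fraction of pairs where the model picks the more-retweeted tweet."""
    return sum(predict(w, a, b) == bool(y) for a, b, y in pairs) / len(pairs)

def train_test_split(pairs, test_fraction: float = 0.2, seed: int = 0):
    """Shuffle, then hold out a fraction of the pairs as the 'test bed'."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    cut = len(shuffled) - n_test
    return shuffled[:cut], shuffled[cut:]
```

In use, one would collect labelled pairs, split them with `train_test_split`, fit `train` on the training portion and report `accuracy` on the held-out portion. Scoring the difference between the two tweets, rather than each tweet on its own, mirrors the paired design: because both tweets share an author and a link, the model is only asked what distinguishes the wording. The 67 percent and 61 percent figures come from the researchers' own data and evaluation, not from a toy like this.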

But we do not need to roll out the welcome mat for our machine overlords just yet. While the retweet algorithm is impressive, it has an Achilles' heel, one shared by all prediction algorithms.

We care about predicting retweets mainly because we want to write better tweets. And we assume these two tasks are related. If Netflix can predict which movies I like, surely it can use the same analytics to create better TV shows. But it doesn't work that way. Being good at prediction often does not mean being better at creation.

One barrier is the oldest of statistical problems: Correlation is not causation. Changing a variable that is highly predictive may have no effect. For example, we may find the number of employees formatting their résumés is a good predictor of a company's bankruptcy. But stopping this behavior is hardly a fruitful strategy for fending off creditors.

The causality problem can show up in very subtle ways. For example, the tweet predictor finds that longer tweets are more likely to be retweeted. It seems unlikely that you should therefore write longer tweets. The old adage that "less is more" is, if anything, truer in this medium. Instead, length is probably a good predictor because longer tweets have more content. So the lesson is not "make your tweets longer" but "have more content," which is far harder to do.

Another problem comes from an inherent paradox in predicting what is interesting. Rarity and novelty often contribute to interestingness - or at least to drawing attention. But once an algorithm finds those things that draw attention and starts exploiting them, their value erodes. When few people do something, it catches the eye; when everyone does it, it is ho-hum. Calling a food "artisanal" was eye-catching, until it became so common that we're not far away from an artisanal plunger.

In the Twitter example, the use of the words "retweet" or "please" was predictive. But if everyone starts asking you to "Share this article. Please," will it continue to work?

Finally, and perhaps most perversely, some of the most predictive variables are circular.

For example, in another paper, the computer scientists Lars Backstrom, Jon Kleinberg, Lillian Lee and Cristian Danescu-Niculescu-Mizil predict which posts on Facebook generate many comments. One of the most predictive variables is the time it takes for the first comment to arrive: If the first comment arrives quickly, then the post is likely to generate many more comments in the future. This helps Facebook decide which posts to show you. But it does not help anyone to write a highly commented post. It says: "Want to write a post people like? Well, write one that people like!"

These limitations are not meant to take away from the power of predictive algorithms. It is truly amazing, for example, how well an algorithm can predict which tweets will get retweeted.

But they do remind us to moderate expectations. Arthur C. Clarke once posited three laws of prediction. The third is apropos here: "Any sufficiently advanced technology is indistinguishable from magic." Because algorithms armed with big data can do some impressive things - self-driving cars! - we can too easily treat them like magic and overstate what they do. This can lead to extrapolations that are simply not realistic. (Soon computers will be doing my job!) It can create ill-founded fears. (Soon companies will know enough about me to get me to buy anything!) And it can create expectations that we are very far from meeting. (Soon computers will write movies!)

The new big-data tools, amazing as they are, are not magic. Like every great invention before them - whether antibiotics, electricity or even the computer itself - they have boundaries in which they excel and beyond which they can do little.

By the way, share this article. Please.

© 2014 New York Times News Service
