Magnetic Chips Can Boost Computer Energy Efficiency: Study

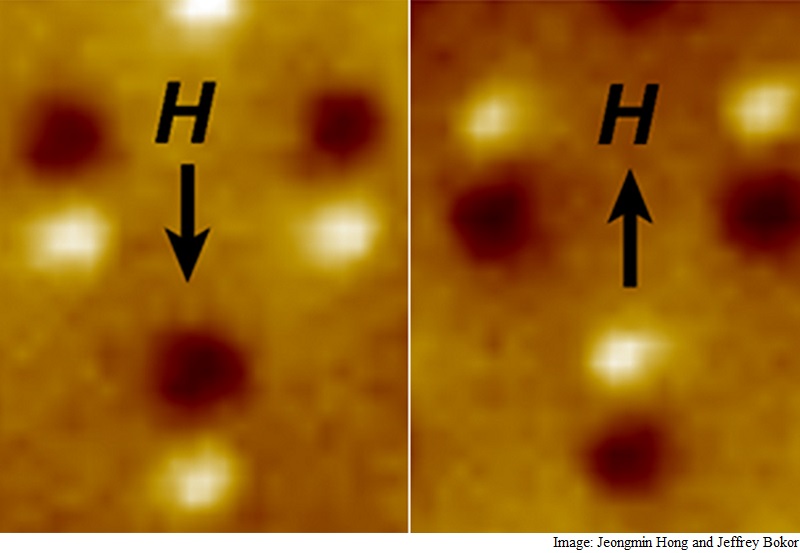

The findings mean that dramatic reductions in power consumption are possible, down to as little as one-millionth the amount of energy per operation used by transistors in modern computers.

This is critical for mobile devices, which demand powerful processors that can run for a day or more on small, lightweight batteries.

On a larger industrial scale, as computing increasingly moves into 'the cloud', the electricity demands of giant cloud data centres are multiplying, collectively taking an increasing share of the country's and the world's electricity supply.

"We wanted to know how small we could shrink the amount of energy needed for computing," said senior author Jeffrey Bokor, a UC Berkeley professor of electrical engineering and computer sciences.

"The biggest challenge in designing computers and, in fact, all our electronics today is reducing their energy consumption," he added in a paper that appeared in the peer-reviewed journal Science Advances.

Lowering energy use is a relatively recent shift in focus in chip manufacturing after decades of emphasis on packing greater numbers of increasingly tiny and faster transistors onto chips.

"Making transistors go faster was requiring too much energy," said Bokor, who is also the deputy director of the Centre for Energy Efficient Electronics Science, a Science and Technology Centre at UC Berkeley funded by the National Science Foundation. "The chips were getting so hot they'd just melt."

Magnetic computing emerged as a promising candidate because the magnetic bits can be differentiated by direction, and it takes just as much energy to get the magnet to point left as it does to point right.
