
Google Develops AI That Can Tell Where Any Photo Was Taken

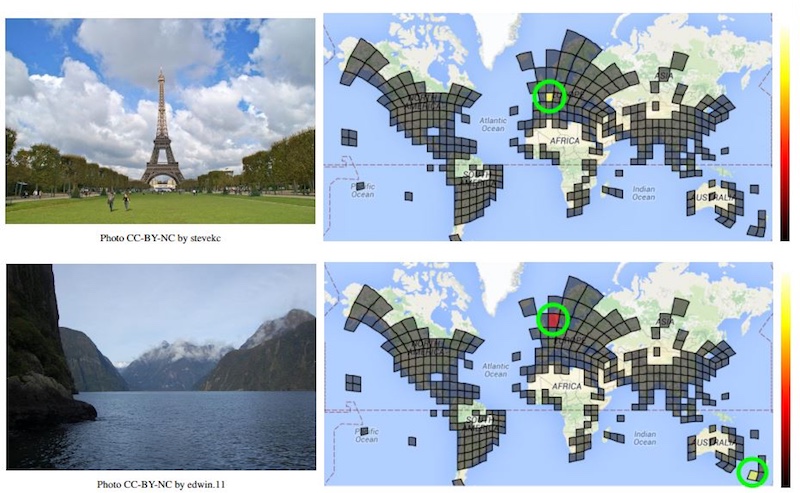

Google may soon not need to look at geo-tagging information to know where a photo was taken. The Mountain View-based company has devised a deep-learning system called PlaNet that can work out a photo's location by analysing its pixels alone.

The effort, led by Tobias Weyand, a computer vision scientist at Google, produced a neural network trained on over 91 million geo-tagged images from across the planet, enabling it to spot patterns and tell where an image was taken. It can also recognise different landscapes, locally typical objects, and even plants and animals in photos.

PlaNet analyses the pixels of a photo and compares what it finds against the patterns it learned from millions of training images. That may sound like a tedious job, but it is not really an issue for a neural network that takes up only about 377MB of storage.
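
A common way to frame this kind of system is to split the globe into cells and treat "where was this taken?" as picking one cell, with each training photo's geo-tag giving its correct cell. The sketch below illustrates that idea only. The uniform 10-degree grid, the `CELL_DEG` constant and the function names are hypothetical, and the per-cell scores would come from a trained network that is not shown here.

```python
# Hypothetical sketch: photo geolocation framed as classification over map cells.
# Assumption: a uniform latitude/longitude grid, chosen for simplicity.
CELL_DEG = 10.0  # hypothetical cell size in degrees


def grid_shape(cell_deg: float = CELL_DEG) -> tuple[int, int]:
    """Return (rows, cols) of a uniform grid covering the globe."""
    return int(round(180 / cell_deg)), int(round(360 / cell_deg))


def cell_of(lat: float, lon: float, cell_deg: float = CELL_DEG) -> int:
    """Map a geo-tag (the training label) to the index of the cell containing it."""
    rows, cols = grid_shape(cell_deg)
    row = min(int((lat + 90) // cell_deg), rows - 1)   # clamp lat = 90 into the top row
    col = min(int((lon + 180) // cell_deg), cols - 1)  # clamp lon = 180 into the last column
    return row * cols + col


def cell_center(idx: int, cell_deg: float = CELL_DEG) -> tuple[float, float]:
    """Return the (lat, lon) centre of a cell."""
    _, cols = grid_shape(cell_deg)
    row, col = divmod(idx, cols)
    return -90 + (row + 0.5) * cell_deg, -180 + (col + 0.5) * cell_deg


def predict_location(cell_probs: dict[int, float], cell_deg: float = CELL_DEG) -> tuple[float, float]:
    """Pick the highest-scoring cell (scores assumed to come from a network) and return its centre."""
    best = max(cell_probs, key=cell_probs.get)
    return cell_center(best, cell_deg)
```

For example, a photo tagged in Paris at (48.86, 2.35) falls in cell 486, and if a model gave that cell the highest score, `predict_location` would answer with the cell centre (45.0, 5.0). Finer cells give more precise answers but leave fewer training photos per cell.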

In a trial run on 2.3 million images, PlaNet identified the country of origin with 28.4 percent accuracy and, more interestingly, the continent of origin with 48 percent accuracy. It also located about 3.6 percent of images with street-level accuracy and 10.1 percent with city-level accuracy. Sure, it isn't 100 percent correct yet, but neither are you. Besides, PlaNet is getting better.
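
Accuracy figures like these are typically computed by measuring the distance between each predicted and true location and counting how many fall within a radius for each level. The sketch below shows that calculation. The distance thresholds are an assumption, following a convention common in photo-geolocation research, and are not stated in the article. The function names are also hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius

# Assumed per-level radii in km (a common benchmark convention, not from the article).
THRESHOLDS_KM = {
    "street": 1,
    "city": 25,
    "region": 200,
    "country": 750,
    "continent": 2500,
}


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(h)))  # clamp float drift


def accuracy_by_level(predictions, truths) -> dict[str, float]:
    """Fraction of predictions within each level's radius of the true location."""
    errors = [haversine_km(p, t) for p, t in zip(predictions, truths)]
    if not errors:
        raise ValueError("need at least one prediction")
    n = len(errors)
    return {level: sum(e <= km for e in errors) / n for level, km in THRESHOLDS_KM.items()}
```

Because the radii are nested, a street-level hit also counts at every coarser level. That is why accuracy can only rise from street to continent, as in the figures above.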

In a number of tests, PlaNet has also beaten humans. The reason, Weyand told MIT Technology Review, is that PlaNet has seen more places than any of us have, and has "learned subtle cues of different scenes that are even hard for a well-travelled human to distinguish."
