Five Things to Know About Artificial Intelligence, and Its Use

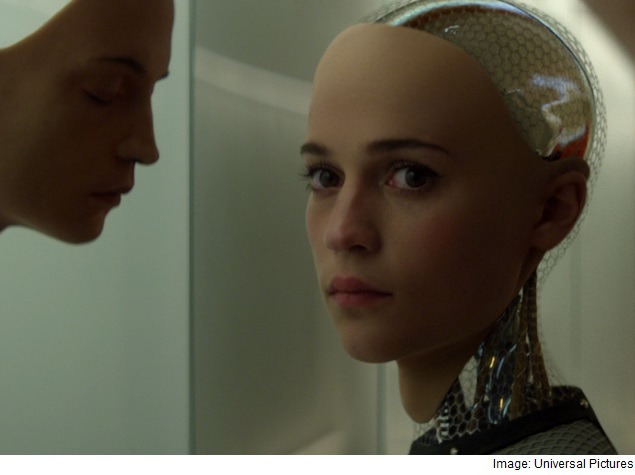

Just how far off in the future is a robot like Ava, the fictional android from the film Ex Machina? And how worried should we be about warnings issued Tuesday that artificial intelligence could be used to build weapons with minds of their own?

Five things to know about artificial intelligence:

Scientists predict weapons 'within years'

Autonomous weapons that can search for and destroy targets could be fielded quickly, according to an open letter released Tuesday and signed by hundreds of scientists and technology experts.

"If any major military power pushes ahead with (artificial intelligence) weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: Autonomous weapons will become the Kalashnikovs of tomorrow," said the letter, which references the Russian assault rifle in use around the world. "Unlike nuclear weapons, they require no costly or hard-to-obtain raw materials, so they will become ubiquitous and cheap for all significant military powers to mass-produce."

Ava-like robots a long way off

Robots with Ava's sophistication are at least 25 years away, and perhaps several decades beyond that, according to the experts. The gap between what's possible today and what Hollywood puts on the movie screen is huge, said Oren Etzioni, chief executive officer of the Allen Institute for Artificial Intelligence in Seattle. "Our robots can't even grip things today," he said. "NASA still has to control spacecraft remotely."

The most challenging aspect of an Ava-like robot is the hardware, said Toby Walsh, a professor of artificial intelligence at the University of New South Wales in Sydney, Australia, and at Australia's Centre of Excellence for Information Communication Technologies.

"It might be 50 to 100 years to have this sort of hardware," Walsh said. "But the software is likely less than 50 years away."

I spy

Facial recognition technology that could be used to spot targets already performs better than humans do, said Bart Selman, a computer science professor at Cornell University in New York. Combined with video from surveillance cameras, that capability could be used to hunt people down autonomously. "That's a bit scary," Selman said.

Selman, Etzioni and Walsh signed Tuesday's letter.

The upside of AI

Most artificial-intelligence researchers are focused on developing technologies that can benefit society, including tools that can make battlefields safer, prevent accidents and reduce medical errors. The letter's signatories are calling for a "ban on offensive autonomous weapons beyond meaningful human control." "The time for society to discuss this issue is right now," Etzioni said. "It's not tomorrow."

US leads, China in pursuit

The United States is the leader in the development of artificial intelligence for military and civilian applications. But China isn't far behind, Selman said. "There's no doubt they are investing in science and technology to catch up," he said.

Any military that knows it might have to face these weapons is going to be working on them itself, Walsh said. "If I was the Chinese, I would be working strongly on them. This is why we need a ban now to stop this arms race now."

Officials at the Pentagon's Defense Advanced Research Projects Agency weren't immediately available for comment. But artificial-intelligence projects are being pursued to provide the U.S. military with "increasingly intelligent assistance," according to an information paper on the agency's website. One program aims to provide a software system that pulls information out of photos by letting the user ask specific questions, ranging from whether a person is on the terrorist watch list to where a building is located.