Ethics Dilemmas May Hold Back Autonomous Cars: Study

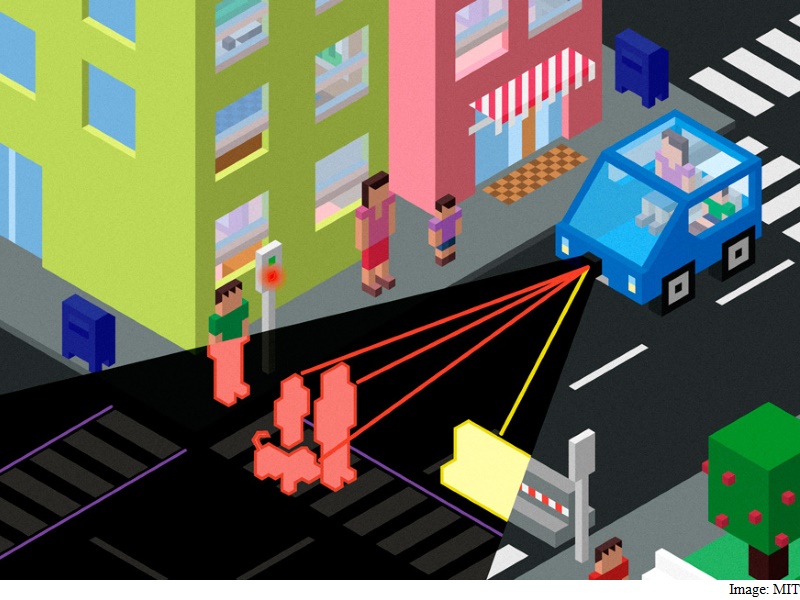

The looming arrival of self-driving vehicles is likely to vastly reduce traffic fatalities, but also poses difficult moral dilemmas, researchers said in a study Thursday.

Autonomous driving systems will require programmers to develop algorithms to make critical decisions that are based more on ethics than technology, according to the study published in the journal Science.

"Figuring out how to build ethical autonomous machines is one of the thorniest challenges in artificial intelligence today," said the study by Jean-Francois Bonnefon of the Toulouse School of Economics, Azim Shariff of the University of Oregon and Iyad Rahwan of the Massachusetts Institute of Technology.

"For the time being, there seems to be no easy way to design algorithms that would reconcile moral values and personal self-interest - let alone account for different cultures with various moral attitudes regarding life-life tradeoffs - but public opinion and social pressure may very well shift as this conversation progresses."

The researchers said adoption of autonomous vehicles offers many social benefits such as reducing air pollution and eliminating up to 90 percent of traffic accidents.

"Not all crashes will be avoided, though, and some crashes will require AVs to make difficult ethical decisions in cases that involve unavoidable harm," the researchers said in the study.

"For example, the AV may avoid harming several pedestrians by swerving and sacrificing a passerby, or the AV may be faced with the choice of sacrificing its own passenger to save one or more pedestrians."

Programming quandary

These dilemmas are "low-probability events" but programmers "must still include decision rules about what to do in such hypothetical situations," the study said.

The researchers said they are keen to see adoption of self-driving technology because of major social benefits.

"A lot of people will protest that they love driving, but us having to drive our own cars is responsible for a tremendous amount of misery in the world," Shariff said during a conference call.

The programming decisions must take into account mixed and sometimes conflicting public attitudes.

In a survey conducted by the researchers, 76 percent of participants said that it would be more ethical for self-driving cars to sacrifice one passenger rather than kill 10 pedestrians.

But just 23 percent said it would be preferable to sacrifice their passenger when only one pedestrian could be saved. And only 19 percent said they would buy a self-driving car if it meant a family member might be sacrificed for the greater good.

The responses show an apparent contradiction: "People want to live [in] a world in which everybody owns driverless cars that minimize casualties, but they want their own car to protect them at all costs," said Rahwan.

"But if everybody thinks this way then we end up in a world in which every car will look after its own passenger's safety or its own safety and society as a whole is worse off."

Regulate or not?

One solution, the researchers said, may be regulations that set clear guidelines for when a vehicle must prioritize the life of its passenger over the lives of others, but it is not clear whether the public will accept this.

"If we try to use regulation to solve the public good problem of driverless car programming we would be discouraging people from buying those cars," Rahwan said.

"And that would delay the adoption of the new technology that would eliminate the majority of accidents."

In a commentary in Science, Joshua Greene of Harvard University's Center for Brain Science said the research shows the road ahead remains unclear.

"Life-and-death trade-offs are unpleasant, and no matter which ethical principles autonomous vehicles adopt, they will be open to compelling criticisms, giving manufacturers little incentive to publicize their operating principles," Greene wrote.

"The problem, it seems, is more philosophical than technical. Before we can put our values into machines, we have to figure out how to make our values clear and consistent. For 21st century moral philosophers, this may be where the rubber meets the road."
