Computers as invisible as the air

By John Markoff, New York Times | Updated: 5 June 2012 02:24 IST


The personal computer is vanishing.

Computers once filled entire rooms, then sat in the closet, moved to our desks, and now nestle in our pockets. Soon, the computer may become invisible to us, hiding away in everyday objects.

A Silicon Valley announcement last week hinted at the way computing technology will transform the world in the coming decade. Hewlett-Packard scientists said they had begun commercialising a Lilliputian switch that is a simpler -- and potentially smaller -- alternative to the transistor that has been the Valley's basic building block for the last half-century.

That means the number of 1's and 0's that can be stored on each microchip could continue to increase at an accelerating rate. As a consequence, each new generation of chip would continue to give designers of electronics the equivalent of a brand new canvas to paint on.

This is the fulfillment of Moore's Law, first described in the 1960s by Douglas Engelbart and Gordon Moore, which posits that computer power increases exponentially while cost falls just as quickly.

That's been the history of computing so far. Thirty-five years ago, Steve Jobs was one of the first to seize on the microprocessor chip to build personal computers. The idea had been pioneered a few years earlier, in the early 1970s, by a group of researchers working with Alan Kay at Xerox's Palo Alto Research Center. At the time it was a radical, almost unthinkable idea: one person, one computer.

In the 1980s, another group of Xerox scientists proposed an even more radical idea: the personal computer was destined, like its predecessor the mainframe computer, for obsolescence. They called its successor "ubiquitous computing." The computer would simply melt away like the Cheshire Cat and become embedded in all the objects that make up daily life.

That is coming true: Our pens, pads of paper and cars, indeed virtually everything we use, are becoming computer-smart. And last week, Mr. Jobs introduced new versions of two products in which the computer is already embedded: the iPod and Apple TV.

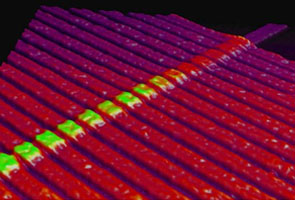

At H.P., the scientist who has led the quest for the new generation of ultrasmall switches, known as memristors, said that their arrival foretells a computing age of discovery that will parallel the productivity increases first brought about by the microprocessor.

"The thing that is happening right now is that we're drowning in data," said Stan Williams, director of H.P.'s Information and Quantum Systems Lab. "The amount of data is increasing at an absolutely ferocious pace, and unless we can catch up it will remain useless."

If he is right and the memristor makes superdense computer memories possible, a single chip will hold as much data as an entire disk drive holds today.

Forecasting what this next-order-of-magnitude increase will mean is impossible, but it's easy to see what the last one created: entire new product categories -- digital music players, cameras and cellphones.

For Mr. Williams, the speed at which vast amounts of data will be accessed is as important as the amount of storage itself.

An example of what's possible is a project called Central Nervous System for the Earth, or CENSE, he said. Drawing on as many as a million sensors, CENSE will make it possible to create a far clearer picture of oil and gas reservoirs than ever before.

The real risk is what happens if Moore's Law fails, said James Tour, a nanoscientist at Rice University.

"If Moore's Law stops, making semiconductors will eventually equal making cars or even shoes," he said, referring to products whose performance does not routinely improve.

In other words, the only thing that will change about computing is the shape of the tail fins.

