Google, MIT Algorithm Removes Reflections and Obstructions From Photos

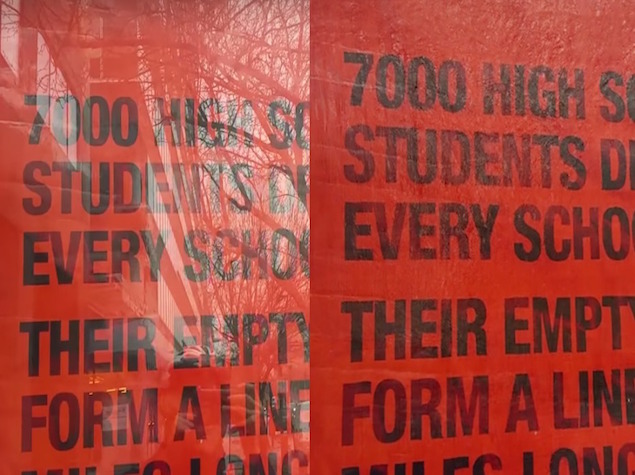

Have you ever tried photographing the outside world from inside a hotel room? Did your photo end up with a big reflection of you and very little of what you wanted to capture? You're not alone. Thankfully, researchers at MIT and Google teamed up to devise an algorithm that removes such reflections and other obstructions from images.

In their paper, titled "A Computational Approach for Obstruction-Free Photography," the researchers detail how the algorithm removes reflections from images and what a photographer needs to do to make that happen. Essentially, the photographer takes a short sequence of images while moving the camera slightly between frames.


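Several photos are required because the obstruction and the background sit at different distances from the camera, so they shift by different amounts from one frame to the next. That difference in motion is what makes it possible to separate out the reflection and recover the desired background scene "as if the visual obstructions were not there."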
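To see why several slightly shifted frames help, here is a toy one-dimensional sketch. It is not the researchers' algorithm, whose approach is considerably more involved; it assumes an opaque, fence-like obstruction, assumes the background's motion in each frame is already known, and simply aligns the frames on the background and takes a per-pixel median. The function names `capture_frames` and `remove_obstruction` are hypothetical.

```python
from statistics import median

# Toy 1-D model (hypothetical, not the researchers' code): a distant background
# and a near, opaque "fence". Because the fence is closer to the camera, it
# shifts more between frames than the background does (motion parallax).


def capture_frames(background, fence, bg_shifts, fence_shifts, width):
    """Simulate one frame per camera position.

    background: pixel values of the distant scene.
    fence: repeating pattern, True where a fence bar blocks the view.
    bg_shifts / fence_shifts: how far each layer moves in each frame.
    """
    frames = []
    for b, f in zip(bg_shifts, fence_shifts):
        frame = []
        for x in range(width):
            if fence[(x + f) % len(fence)]:
                frame.append(0)  # opaque fence pixel hides the background
            else:
                frame.append(background[x + b])
        frames.append(frame)
    return frames


def remove_obstruction(frames, bg_shifts, bg_len):
    """Align every frame to background coordinates and take a per-pixel median.

    Assumes the background motion (bg_shifts) is already known; estimating
    it is a hard part of the real problem that this sketch skips.
    """
    result = []
    for p in range(bg_len):
        # Collect every frame's observation of background pixel p.
        samples = [
            frame[p - b]
            for frame, b in zip(frames, bg_shifts)
            if 0 <= p - b < len(frame)
        ]
        # The fence covers p in only a minority of frames, so the median
        # picks out the true background value.
        result.append(median(samples) if samples else None)
    return result


background = [10 + 3 * k for k in range(24)]
fence = [True, False, False, False, False]  # one bar every 5 pixels
bg_shifts = [0, 1, 2, 3, 4]                 # background moves 1 px per frame
fence_shifts = [0, 3, 6, 9, 12]             # nearer fence moves 3 px per frame

frames = capture_frames(background, fence, bg_shifts, fence_shifts, width=20)
recovered = remove_obstruction(frames, bg_shifts, len(background))

print(frames[0][:6])    # single frame, fence bars show up as 0: [0, 13, 16, 19, 22, 0]
print(recovered[4:10])  # median across frames: [22, 25, 28, 31, 34, 37]
```

In this toy setup each background pixel is hidden in exactly one of the five frames, so the median always lands on the true value; any single frame, by contrast, still shows the fence.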

The algorithm analyses the obstructions and gets rid of the reflections. It can also handle raindrops, fences and other similar obstructions. In a video accompanying the research, the researchers show how accurately the algorithm recovers the background.

Which brings us to the question you are most likely asking: when is it coming to our smartphones? There isn't any concrete information yet. "The ideas here can progress into routine photography, if the algorithm is further robustified and becomes part of toolboxes used in digital photography," the researchers said. We hope camera makers get behind this project soon.
